Learning sensori-motor mappings using little knowledge: application to manipulation robotics

| Summary and data subjects: |

This thesis focuses on learning a complex manipulation robotics task using little prior knowledge. More precisely, the task consists of reaching an object with a serial arm, and the objective is to learn it without camera calibration parameters, forward kinematics, handcrafted features, or expert demonstrations. Deep reinforcement learning algorithms are well suited to this objective: reinforcement learning makes it possible to learn sensori-motor mappings without an explicit model of the system dynamics, and deep learning removes the need for handcrafted features in the state-space representation.
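To make "learning a sensori-motor mapping without a dynamics model" concrete, here is a minimal, hypothetical sketch on a toy 1-D reaching task (the environment, its size `N`, the `TARGET` cell and the learning parameters are all illustrative assumptions, unrelated to the thesis setup). The learner never reads the equations inside `step()`; it only uses sampled transitions to update Q-values, and the greedy policy it extracts is the learned state-to-action mapping. Every state-action pair is sampled once per sweep so that the result is deterministic.

```python
# Toy 1-D "reaching" task (hypothetical): the hand occupies one of N cells
# and must reach TARGET. Illustration only, not the thesis environment.
N, TARGET = 10, 7
ACTIONS = (-1, +1)


def step(pos: int, action: int):
    """Black-box transition; the learner only observes (next_pos, reward, done)."""
    nxt = min(max(pos + action, 0), N - 1)
    done = nxt == TARGET
    return nxt, (1.0 if done else 0.0), done


def q_learning(sweeps: int = 200, alpha: float = 0.5, gamma: float = 0.9):
    """Model-free Q-learning from sampled transitions (all state-action pairs each sweep)."""
    Q = {(s, a): 0.0 for s in range(N) for a in ACTIONS}
    for _ in range(sweeps):
        for s in range(N):
            if s == TARGET:
                continue  # terminal state: no action is taken from here
            for a in ACTIONS:
                s2, r, done = step(s, a)
                # Bootstrapped target: no future value after reaching the goal.
                target = r if done else r + gamma * max(Q[(s2, b)] for b in ACTIONS)
                Q[(s, a)] += alpha * (target - Q[(s, a)])
    return Q


def greedy_policy(Q):
    """The learned sensori-motor mapping: state -> best action."""
    return {s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(N) if s != TARGET}


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

The learned policy moves right (+1) below the target and left (-1) above it, even though no transition model was ever given to the learner.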

However, it is difficult to specify the objective of the task without requiring human supervision. Some solutions rely on expert demonstrations or on shaping rewards that guide the robot towards its objective; shaping rewards are generally computed using forward kinematics and handcrafted visual modules. Another class of solutions consists in decomposing the complex task. Learning from easy missions can be used, but this requires knowledge of a goal state. Decomposing the whole complex task into simpler subtasks (hierarchical learning) is another option, but it does not necessarily dispense with human supervision. Alternative approaches that run several agents in parallel to increase the probability of success can also be used, but they are costly. In our approach, we decompose the whole reaching task into three simpler subtasks, taking inspiration from human behavior.
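For contrast, the kind of shaping reward that this work tries to avoid can be sketched for a hypothetical two-link planar arm. The reward is the negative Euclidean distance between the end-effector position, obtained through forward kinematics, and a target given in Cartesian coordinates. The link lengths and target are illustrative assumptions; the point is that both the kinematic model and the target position must be supplied by a human.

```python
import math

# Hypothetical link lengths (metres) of a two-link planar arm.
LINK1, LINK2 = 0.3, 0.25


def forward_kinematics(q1: float, q2: float) -> tuple:
    """End-effector (x, y) for joint angles q1, q2 in radians (requires a known arm model)."""
    x = LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2)
    y = LINK1 * math.sin(q1) + LINK2 * math.sin(q1 + q2)
    return x, y


def shaped_reward(q1: float, q2: float, target: tuple) -> float:
    """Dense shaping reward: negative distance to a target known in Cartesian space."""
    x, y = forward_kinematics(q1, q2)
    return -math.hypot(x - target[0], y - target[1])


if __name__ == "__main__":
    # Arm stretched along x: end-effector at (0.55, 0.0), target at (0.4, 0.2).
    print(shaped_reward(0.0, 0.0, (0.4, 0.2)))
```

Such a reward is informative at every step, which is why it speeds up learning, but it presupposes exactly the forward kinematics and target localization that the thesis sets out to learn without.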

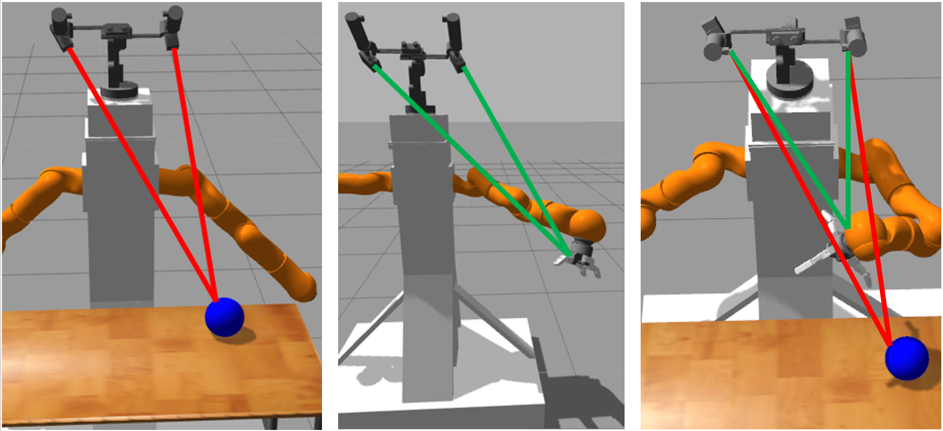

Indeed, humans first look at an object before reaching for it. The first task is an object fixation task, aimed at localizing the object in 3D space. It is learned using deep reinforcement learning and a weakly supervised reward function. The second task consists in jointly learning binocular fixations of the end-effector and a hand-eye coordination function. It is learned with a similar set-up and aims at localizing the end-effector in 3D space. The third task uses the two previously learned skills to learn to reach an object, under the same requirements as the first two tasks: it requires hardly any supervision. In addition, an object reachability predictor is learned in parallel, without additional priors. The main contribution of this thesis is the learning of a complex robotic task with weak supervision.
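One way to see how the first two skills can replace forward kinematics is to express both the object and the end-effector in a common "gaze space": the head configuration (assumed here to be pan, tilt and vergence angles) that fixates them binocularly. The sketch below is a hypothetical illustration under that assumption, not the thesis's implementation. `hand_eye` stands in for the learned hand-eye coordination function and is replaced by a fixed linear map purely for demonstration, and `reaching_reward` and its tolerance `tol` are likewise illustrative names and values.

```python
import numpy as np

# Gaze configuration (assumption): (pan, tilt, vergence) angles in radians.
# When both eyes fixate a point, these angles implicitly encode its 3D position.


def hand_eye(arm_q: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the learned hand-eye coordination function.

    Maps arm joint angles to the gaze configuration that would fixate the
    end-effector. A fixed linear map is used here only for illustration; in
    practice this mapping would be learned (e.g. by a neural network).
    """
    W = np.array([[0.5, 0.0, 0.1],
                  [0.0, 0.4, 0.2],
                  [0.1, 0.1, 0.3]])
    return W @ arm_q


def reaching_reward(object_gaze: np.ndarray, arm_q: np.ndarray, tol: float = 0.05) -> float:
    """Sparse reward: 1 if the end-effector's predicted gaze matches the object's gaze."""
    error = np.linalg.norm(hand_eye(arm_q) - object_gaze)
    return 1.0 if error < tol else 0.0


if __name__ == "__main__":
    arm_q = np.array([0.2, -0.1, 0.4])
    object_gaze = hand_eye(arm_q)  # object placed where the hand already is
    print(reaching_reward(object_gaze, arm_q))
```

In this view, no camera calibration or kinematic model appears in the reward: the object fixation skill provides `object_gaze`, and the hand-eye function provides the end-effector's position in the same space.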

Data subjects: manipulation robotics, deep reinforcement learning, weakly-supervised learning


| Publications: |

Learning of binocular fixations using anomaly detection with deep reinforcement learning, F. de La Bourdonnaye, C. Teuliere, J. Triesch, T. Chateau, IJCNN 2017

Apprentissage par renforcement profond de la fixation binoculaire en utilisant de la détection d’anomalies [Deep reinforcement learning of binocular fixation using anomaly detection], F. de La Bourdonnaye, C. Teuliere, J. Triesch, T. Chateau, ORASIS 2017

Learning to touch objects through stage-wise deep reinforcement learning, F. de La Bourdonnaye, C. Teuliere, J. Triesch, T. Chateau, IROS 2018

Stage-wise learning of reaching using little knowledge, F. de La Bourdonnaye, C. Teuliere, J. Triesch, T. Chateau, Frontiers in Robotics and AI 2018

Within Reach? Learning to touch objects without prior models, F. de La Bourdonnaye, C. Teuliere, T. Chateau, J. Triesch, accepted at ICDL-EPIROB 2019