Design of a cooperative vision system based on silicon retinas to address ADAS and AD applications

| Summary and data subjects : |

This project was funded and carried out by Renault Group because perception for AD (Autonomous Driving) and ADAS (Advanced Driver Assistance Systems) still fails in many use cases, despite tremendous efforts in both industrial and research laboratories and despite the high complexity and computational load of current systems. The future of mobility, even autonomous, must also respect the environment, so the power consumption of embedded systems must decrease. These two trends pull in opposite directions, which calls for new strategies. The neuromorphic approach aims to design perception systems inspired by nature, but the asynchronous, analog architecture of biological nervous systems differs greatly from that of the traditional computing systems in common use. This work investigates the advantages of artificial retinas for automotive applications, and more specifically of the Dynamic Vision Sensor (DVS) designed by Delbruck’s team at ETH Zurich. These new sensors are now spreading into many application domains, and a few industrial prototypes have been announced by top semiconductor suppliers such as Samsung.

| Description : |

Each pixel of the DVS sensor reports changes in brightness in the scene: every detected change produces an event that carries a timestamp and a polarity (the sign of the change). This behavior requires many analog operations inside the pixel and a new data representation to manage the detected changes asynchronously. It also removes redundancy compared with a traditional frame-based approach, since the sensor adapts its sampling to the scene as needed, up to several kHz. However, to handle the full range of speeds that complex automotive scenarios can generate, new strategies must be designed to process the temporal information. Many spatiotemporal features already exist and can be used by classifiers, but their advantage over frame-based algorithms is still unclear to us.
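To make the pixel behavior concrete, here is a minimal sketch of an idealized event-generating pixel. It is not the analog circuit of the actual sensor: it assumes the commonly used simplified model in which a pixel memorizes the log intensity at its last event and emits a new event each time the current log intensity departs from that reference by a contrast threshold. The function name `pixel_events` and the threshold value are hypothetical, chosen only for illustration.

```python
import math


def pixel_events(samples, threshold=0.2):
    """Idealized DVS pixel: return a list of (timestamp, polarity) events.

    samples   -- iterable of (timestamp, intensity) pairs, intensity > 0
    threshold -- hypothetical contrast threshold applied to log intensity
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")

    events = []
    samples = iter(samples)
    _, first_intensity = next(samples)
    # Log intensity memorized by the pixel at its last event.
    reference = math.log(first_intensity)

    for t, intensity in samples:
        delta = math.log(intensity) - reference
        # A large change can cross the threshold several times in one step.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            reference += polarity * threshold
            delta = math.log(intensity) - reference
    return events
```

With this model a static scene produces no output at all, while a fast or high-contrast change produces a burst of events, which is the redundancy removal described above.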

This work is organized around three questions:

- How different are DVS characteristics compared with traditional approaches?

- How can conventional frame-based approaches be adapted to event-based data, and how should an event be processed when it arrives?

- How can a cooperative video system between targets be set up with these new sensors?
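As an illustration of the second question, one common way to reuse frame-based algorithms is to accumulate events over a short time window into a 2D image. The sketch below is an assumption for illustration only, not necessarily the representation used in this work; the function name `accumulate_events` and the event layout `(x, y, timestamp, polarity)` are hypothetical.

```python
def accumulate_events(events, width, height, t_start, t_end):
    """Sum event polarities per pixel over the window [t_start, t_end).

    events -- iterable of (x, y, timestamp, polarity) tuples (assumed layout)
    Returns a height x width list of lists of signed event counts.
    """
    frame = [[0] * width for _ in range(height)]
    for x, y, t, polarity in events:
        in_window = t_start <= t < t_end
        in_bounds = 0 <= x < width and 0 <= y < height
        if in_window and in_bounds:
            frame[y][x] += polarity
    return frame


# Usage: four events on a 2x2 sensor, accumulated over a 10 ms window.
events = [(0, 0, 0.001, 1), (0, 0, 0.002, 1), (1, 1, 0.003, -1), (1, 0, 0.020, 1)]
frame = accumulate_events(events, width=2, height=2, t_start=0.0, t_end=0.010)
```

The resulting image can be fed to a conventional classifier, at the cost of discarding the fine timing inside the window. Processing each event as it arrives avoids that loss but requires dedicated spatiotemporal features.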

| Illustration : |

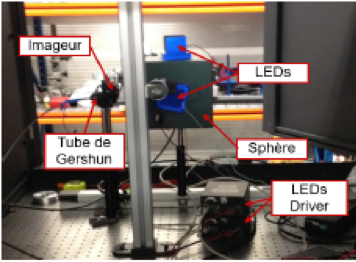

Sensor Characterization

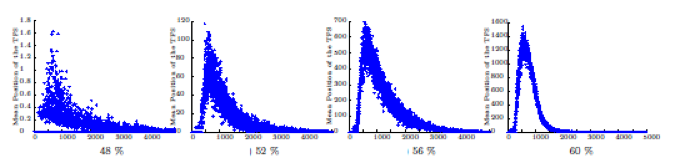

Sensor response as a function of contrast level

Sensor Simulation as a function of sensitivity

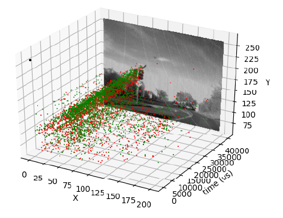

Event-based data:

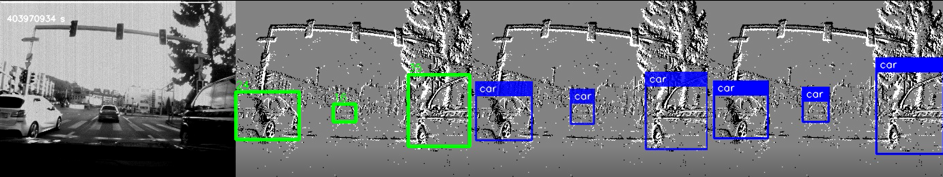

Detection and classification:

| Publication : |

Damien Joubert, Mathieu Hébert, Hubert Konik, and Christophe Lavergne, “Characterization setup for event-based imagers applied to modulated light signal detection,” Appl. Opt. 58, 1305-1317 (2019)

Damien Joubert, Hubert Konik, and Frederic Chausse, “Convolutional Neural Network for Detection and Classification with Event-based Data,” in Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications – Volume 5: VISAPP, ISBN 978-989-758-354-4, pages 200-208 (2019). DOI: 10.5220/0007257002000208